2025.06.09

What If Your Coffee Mug Could Talk? Bringing Everyday Objects to Life with AR and AI

Fabrice Matulic

Researcher

Ever wanted to have a little chat with your coffee mug, or your toaster? Giving human-like qualities to inanimate objects is something many people do. We see faces in clouds, assign personalities to our gadgets, and sometimes talk to our plants. This natural tendency, known as anthropomorphism, got us thinking: what if the everyday objects around us could actually talk back, complete with faces and personalities, and interact not just with us but also with other objects in the surroundings?

In some ways, the “talking to us” part is already possible. We have smart devices that speak, and voice assistants like Alexa and Siri are common. They are great for functional tasks (“hey AI, what’s the weather?”), but the interactions are often transactional, and the AI itself is usually a disembodied voice or a character on a screen, separate from the physical things we own and use.

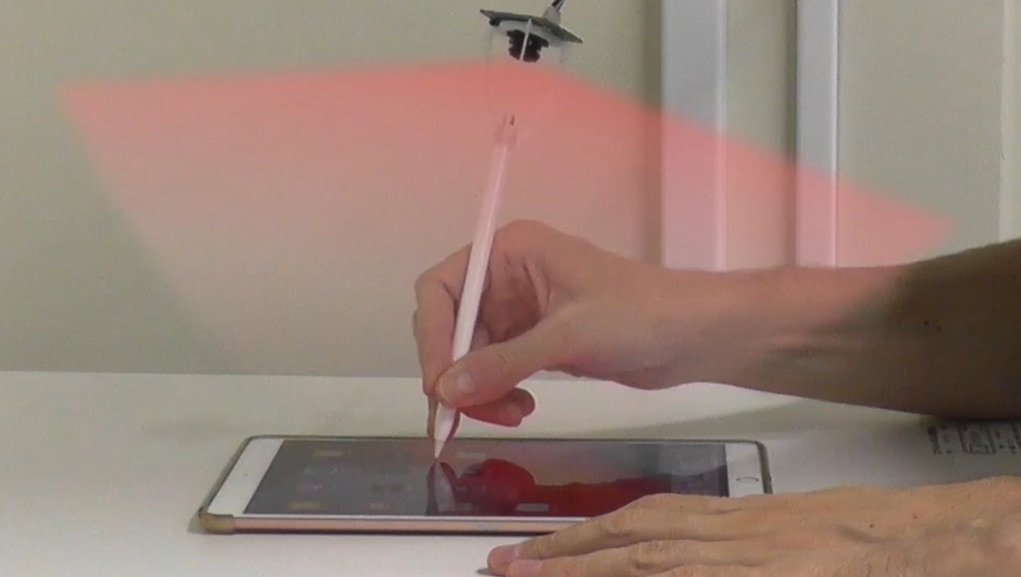

In this research, which we presented at CHI 2025, we’re exploring something different: using Augmented Reality and AI to transform any household item into an interactive, talking character you can see and chat with. Imagine your sneakers greeting you in the morning, or your food having a debate about dinner plans, all with friendly animated faces visible right there on the objects through your phone or AR glasses.

The Spark of Life: How AI Gives Your Stuff a Soul (and a Face)

So, how does this work? When you indicate an object you want to interact with through your AR device, an image of the object is captured and analysed by a Vision Language Model (VLM). This VLM doesn’t just perform basic object recognition; it’s prompted to creatively dream up a unique character for that specific object. It considers the object’s appearance and what it’s used for to generate a fitting name, personality traits, and a conversational style. So, for example, a pack of biscuits might be assigned a sweet and comforting persona, while a cactus could get a prickly one. The VLM then picks out a suitable animated face from a library and a voice style that matches this personality.
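To make the character-creation step a little more concrete, here is a minimal sketch of how a persona could be requested from a VLM and validated. It is an illustration under assumptions, not our actual implementation: the `Persona` fields, the contents of `FACE_LIBRARY` and `VOICE_STYLES`, and the JSON reply format are all hypothetical, and the call to the VLM itself is left out.

```python
import json
from dataclasses import dataclass

# Hypothetical asset libraries; the real face and voice sets are not listed in the post.
FACE_LIBRARY = ("cheerful", "grumpy", "sleepy", "shy")
VOICE_STYLES = ("warm", "dry", "squeaky", "calm")


@dataclass
class Persona:
    name: str
    traits: list
    speaking_style: str
    face: str
    voice: str


def build_persona_prompt() -> str:
    """Text prompt that would be sent to the VLM together with the captured image."""
    return (
        "Look at the object in the image. Based on its appearance and what it "
        "is used for, invent a character for it. Reply with JSON only, using "
        "the keys: name, traits (list of strings), speaking_style, face, voice. "
        f"face must be one of {list(FACE_LIBRARY)}; "
        f"voice must be one of {list(VOICE_STYLES)}."
    )


def parse_persona(vlm_reply: str) -> Persona:
    """Parse the VLM's JSON reply, falling back to defaults for invalid picks."""
    data = json.loads(vlm_reply)
    face = data.get("face")
    voice = data.get("voice")
    return Persona(
        name=str(data["name"]),
        traits=[str(t) for t in data.get("traits", [])],
        speaking_style=str(data.get("speaking_style", "friendly")),
        # Only accept faces and voices that exist in the (assumed) libraries.
        face=face if face in FACE_LIBRARY else FACE_LIBRARY[0],
        voice=voice if voice in VOICE_STYLES else VOICE_STYLES[0],
    )
```

Constraining the face and voice to a fixed list, and falling back to a default when the model returns something else, keeps the character renderable even when the model's reply is slightly off.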

And for an extra dash of uniqueness, the system can also use a 3D asset generation model to dynamically create custom headgear for each character, like quirky noodle pigtails for a cup of instant ramen or a raspberry-shaped hat for a pack of raspberry tartlets.

Once the character is “born”, a real-time Large Language Model uses the object’s personality, your spoken words, and what’s happening in the environment to generate dynamic and engaging dialogues, with a text-to-speech engine giving voice to the characters.
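As a rough sketch of how those three inputs might be combined, the function below assembles a prompt from a persona, a log of recent environment events, and the user's transcribed speech. The role/content message layout follows a common convention for chat-style LLM APIs; the persona keys, the `MAX_EVENTS` cap, and the prompt wording are assumptions made for illustration.

```python
MAX_EVENTS = 5  # assumed cap on how many recent environment events to include


def build_messages(persona: dict, env_log: list, user_text: str) -> list:
    """Assemble chat-style messages for one reply from one character."""
    system = (
        f"You are {persona['name']}, a talking {persona['object']}. "
        f"Personality: {', '.join(persona['traits'])}. "
        f"Speaking style: {persona['style']}. "
        "Stay in character and keep replies to one or two sentences."
    )
    # Only the most recent events are passed along to keep the prompt short.
    recent = env_log[-MAX_EVENTS:]
    if recent:
        context = "Recent events around you:\n" + "\n".join(f"- {e}" for e in recent)
    else:
        context = "Nothing notable has happened around you recently."
    return [
        {"role": "system", "content": system},
        {"role": "system", "content": context},
        {"role": "user", "content": user_text},
    ]
```

The resulting list would be handed to whichever LLM client is in use, and the generated reply passed on to the text-to-speech engine.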

The great thing about this is that it works for virtually any object. We’re not limited to specially designed smart devices or items that need to be pre-registered. Point your phone at a cushion, a pair of headphones or a bottle of wine, and the object can immediately turn into an AR character you can chat with, imbued with a persona uniquely spun from its own physical being.

You can create multiple characters this way, and the fun part is that, in addition to talking to you, they can also spontaneously engage in conversations among themselves. So if, for example, you’re curious what a chat between a disinfectant spray and a box of tissues might sound like, you can just sit back and listen to their banter. Given that the characters know which objects they represent, there’s a high chance that hygiene will be a topic in their dialogue.
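One simple way to decide who speaks next in such a multi-character conversation is sketched below. The post does not describe how turns are actually chosen, so the round-robin rule, the priority given to a character the user addresses, and the name `next_speaker` are purely illustrative assumptions.

```python
def next_speaker(characters: list, last_speaker, user_addressed=None):
    """Pick who talks next: the addressed character, else the next in rotation."""
    if not characters:
        return None
    # A character the user just spoke to always answers first.
    if user_addressed in characters:
        return user_addressed
    # No valid previous speaker (e.g. start of conversation): begin with the first.
    if last_speaker not in characters:
        return characters[0]
    idx = characters.index(last_speaker)
    return characters[(idx + 1) % len(characters)]
```

For example, with `["Spray", "Tissues"]` and `"Spray"` as the last speaker, the function returns `"Tissues"`, which gives the back-and-forth banter described above.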

More Than Just Words

Giving objects a voice and a face is one thing, but we wanted to make these interactions feel truly alive and grounded in the real world.

- Seeing is Believing: To increase dynamism and engagement, we made the faces expressive and fun, with cartoon-style visualisations reinforcing their emotional state. So, when your toothpaste character gets angry because you made a comment about its mintiness, you might see its face scowl, accompanied by some comical steam effects.

- Environment Awareness: Characters have a sense of their surroundings, thanks to the VLM periodically analysing the environment and logging any changes to it. This log is used as a context prompt for the dialogue-creating LLM, so that changes in the environment can be reflected in the conversation. Thus, a cup on a messy desk might cheekily comment about the untidy state of your office. Similarly, characters can notice when new object-friends appear on the scene and greet them, or bid farewell if one disappears from view.

- Responding to You (and Your Actions): Beyond just chatting, the characters can also respond to how you interact with them and their physical object:

  - With hand and object tracking, the system can detect if an object is picked up, and, thanks again to the context prompt influencing dialogue generation, the associated character can react accordingly. On a smartphone, characters can be “poked” by tapping them on the screen.

  - If using an AR headset with eye-tracking capabilities, characters can react to your gaze, perhaps getting a bit flustered if you stare at them for too long. The system also detects if you approach or move away from them, possibly triggering reactions from characters to your changing proximity.

  - Is that cup-noodle character a bit too chatty? Knock its physical container down to silence it (though other nearby characters might show concern that their friend has been rendered unconscious). If you feel sorry for it, set the character upright again to reanimate it.

  - And for a truly novel interaction: bring two objects physically close to each other, and their characters can fuse into a brand new one. This fused character gets a blended personality and combined visual features, like merged headgear (Dragon Ball Z fans will be reminded of character fusion, but in our case, no need for a dance with weird poses to make it happen). For example, imagine a Spicy Curry Cup character and a Sweet Tartsy tartlet character merging into “CurryTart” with a uniquely spicy-sweet persona. When you’ve had enough of this combined character, just pull the physical objects apart, and they revert to their original selves.
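Two of the mechanisms in this list lend themselves to a small sketch: turning scene changes into log entries for the context prompt, and detecting when two objects are close enough to fuse. The code below is an illustration under assumptions rather than our actual system: object names and 3D positions are taken as given by some tracking layer, and the 10 cm `FUSE_DISTANCE_M` threshold is a made-up value.

```python
import math
from itertools import combinations

FUSE_DISTANCE_M = 0.10  # assumed threshold in metres; the post gives no value


def scene_events(previous: set, current: set) -> list:
    """Describe which objects appeared or disappeared between two scene snapshots."""
    events = [f"{name} appeared" for name in sorted(current - previous)]
    events += [f"{name} disappeared" for name in sorted(previous - current)]
    return events


def fusion_pairs(positions: dict, threshold: float = FUSE_DISTANCE_M) -> list:
    """Return pairs of object names whose centres are closer than the threshold."""
    pairs = []
    # Sort by name so each pair is reported in a stable, alphabetical order.
    for (a, pa), (b, pb) in combinations(sorted(positions.items()), 2):
        if math.dist(pa, pb) < threshold:
            pairs.append((a, b))
    return pairs
```

The strings returned by `scene_events` could be appended to the environment log that feeds the dialogue prompt. Calling `fusion_pairs` on every tracking update also covers the reverse case: once the objects are pulled apart, the pair is no longer returned and the characters can revert to their original selves.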

The Bigger Picture

So, what’s the point of having your toaster give you a morning pep talk? Beyond the fun factor, we see some deeper potential:

- Entertainment and Storytelling: Imagine interactive narratives woven into your home, like your own personal Toy Story experience, or spontaneous comedy shows starring your household items. Who needs another sitcom when your salt and pepper shakers are having a stand-up routine right on your dining table?

- Education and Learning: Learning could shift from a passive activity to an active, conversational experience. Imagine historical artifacts in a museum not just being described by an audio guide, but personally recounting their rich stories with expressive, animated faces. Or a plant character enthusiastically explaining the principles of photosynthesis as you water it. By giving a voice and a face to the subjects of learning, abstract concepts can become more tangible, memorable, and fun, especially for younger learners.

- Companionship and Well-being: For people experiencing loneliness (a major issue in Japan), having friendly, familiar objects to interact with may provide comfort and companionship. A coffee maker character could notice its owner is drinking more coffee than usual and thoughtfully inquire about their fatigue, much like a concerned friend. This could foster a deeper sense of connection between people and their personal belongings and spaces.

- A Friendlier Smart Home: Interacting with IoT devices and smart appliances could become more intuitive and enjoyable. Instead of barking commands at a disembodied voice assistant, users could have a friendly chat with their smart lamp about the lighting, or a thermostat character might ask whether anyone is feeling chilly (all with friendly faces to make the conversations more relatable).

What’s Ahead

We’ve just scratched the surface and we’re excited about the possibilities this research uncovers. The idea of transforming any physical object into an interactive, personality-rich AR character, automatically and dynamically, is just the beginning. There’s still much to explore, from making the conversations even deeper and more knowledgeable (thanks to the constant advances of AI), to allowing users to customise character personalities and appearances in different ways. We also want to conduct longitudinal user studies to get a better understanding of how these interactions actually affect people in their daily lives.

Ultimately, with this research, we seek to create deeper, more affective relationships with the objects that surround us, making them feel less like passive tools and more like active, engaging participants in our environments. We’re interested in investigating how this blend of AI and AR can lead to a future where our interactions with the digital and physical worlds are more seamless, intuitive, and, importantly, more socially and emotionally intelligent. Who knows, maybe your future best friend is currently sitting on your kitchen counter!